Last month, our article on social purpose was published in Organization Science (an open access version is available). With the study finally out, we decided to run a small experiment: if we had used Skimle for the qualitative analysis, would the study have looked different? We fed Skimle key parts of our data and compared its output with the analysis in the published paper. The exercise was instructive: it revealed what AI can strengthen in qualitative research, where it (still) falls short, and what features we need to add to Skimle next.

Our theory-building study: Social purpose formation

Like many journal articles, our study, Social Purpose Formation and Evolution in Nonprofit Organizations, was years in the making. We began collecting data in 2017, initially focusing on social organizations responding to the European refugee crisis by supporting the integration of asylum seekers into the Finnish economy. Quite quickly, one volunteer-driven nonprofit stood out. We call it Inclusion (a pseudonym). With two driven founders and a committed base of volunteers, Inclusion pursued an ambitious goal: helping refugees and immigrants enter the tech sector and even build ventures of their own.

We didn't initially set out to study social purpose. Rather, our first framing focused on organizational identity and emotions, asking why volunteers became so strongly attached to what they saw as the organization's "core". Our analysis highlighted how different people were emotionally drawn to the specific aspects of the organizational identity that they saw as most central, which we called the "emotional locus" of identity. We submitted that manuscript to the Academy of Management Journal in 2020, where it was rejected after one round of revisions. The rejection was painful, but it helped us push our thinking forward. In the end, it proved difficult to organize the empirical data around emotions, particularly without sustained direct observation.

After a hard rethink, we abandoned our focus on identity and turned to social purpose. The concept captured our empirical observations better, connecting strongly to emotions and a sense of meaningfulness. And while the conversation about corporate purpose was burgeoning, the nonprofit sector, where purpose is arguably the whole point, had received surprisingly little attention.

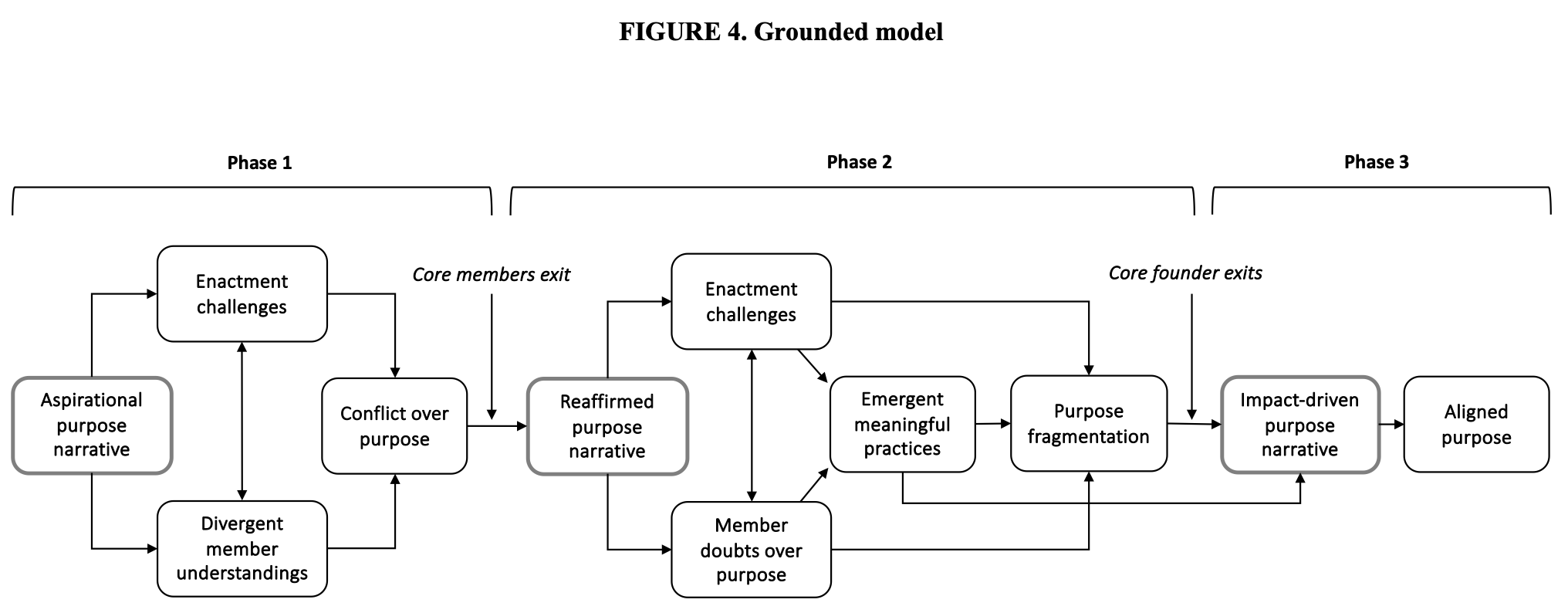

We re-read our data through this lens and realized that purpose took three somewhat interrelated forms: leaders' narratives of purpose, members' internalized understandings, and organizational practices aimed at social impact. We then noticed that the same elements had already been articulated by George et al. in a Journal of Management article. Instead of reinventing the wheel, we used their work as a scaffold and focused on explaining how social purpose emerges and takes shape over time.

This became the core of our contribution. It speaks directly to nonprofit leadership and to how front-line members shape (and sometimes reshape) the mission or purpose in nonprofit organizations. Here is the final model:

Automated thematic analysis with Skimle

To test how Skimle would have helped us, we uploaded all 53 interviews and ran its Automated Thematic Analysis. This is Skimle's proprietary "zero prompts" feature: it scans interviews, identifies interview questions, generates broad themes related to those questions, and then searches the full dataset for content relevant to each theme.

This feature is pretty useful because it keeps the analysis anchored in researcher-relevant topics, surfaces cross-interview themes, and consolidates evidence for each theme at scale.

Our main challenge, however, was coherence. Our interview guide shifted quite a bit over time: The first interviews were conducted in 2017, the last in 2023! The interviews were semi-structured, and several researchers conducted them, each with their own style and follow-up questions. We also narrowed our analytical focus to social purpose only after many of the interviews had already been completed. The task was therefore clear: Could the AI retrieve and organize relevant material from more than fifty interviews in a way that still made analytic sense?

Unsurprisingly, Skimle generated 30 different themes to capture the full scope of our data. Below are the fifteen themes with the most insights:

| Theme | Number of insights |
|---|---|
| Value Proposition, Impact, and Outcomes | 164 |
| Target Audience, Inclusivity, and Participant Characteristics | 160 |
| Organizational Mission, Identity, and Purpose | 158 |
| Program Content, Services, and Offerings | 156 |
| Background, Role, and Personal Journey | 139 |
| Organizational Structure, Staffing, and Capacity | 138 |
| Organizational Change, Evolution, and Strategic Direction | 134 |
| Personal Motivation, Values, and Job Satisfaction | 127 |
| Challenges, Frustrations, and Areas for Improvement | 122 |
| Business Model, Revenue, and Financial Sustainability | 111 |
| Partnerships, Collaboration, and Ecosystem Relationships | 110 |
| Decision-Making, Governance, and Leadership | 103 |
| Stakeholder Perception, Communication, and External Relations | 103 |
| Future Vision, Scaling, and International Expansion | 98 |
| Practices, Processes, and Program Development | 93 |

The themes created by Skimle cover most of the topics across our analyses. Having this analysis available for all co-authors would have helped us get up to speed.

That said, some categories did not make sense from our perspective: for example, the AI merged "future vision", a central element of our study, with "international expansion", a minor initial aspiration that never materialized. And once you know the case, several themes felt redundant, such as "Practices, Processes, and Program Development" and "Program Content, Services, and Offerings". Luckily, Skimle enables users to merge overlapping categories easily.

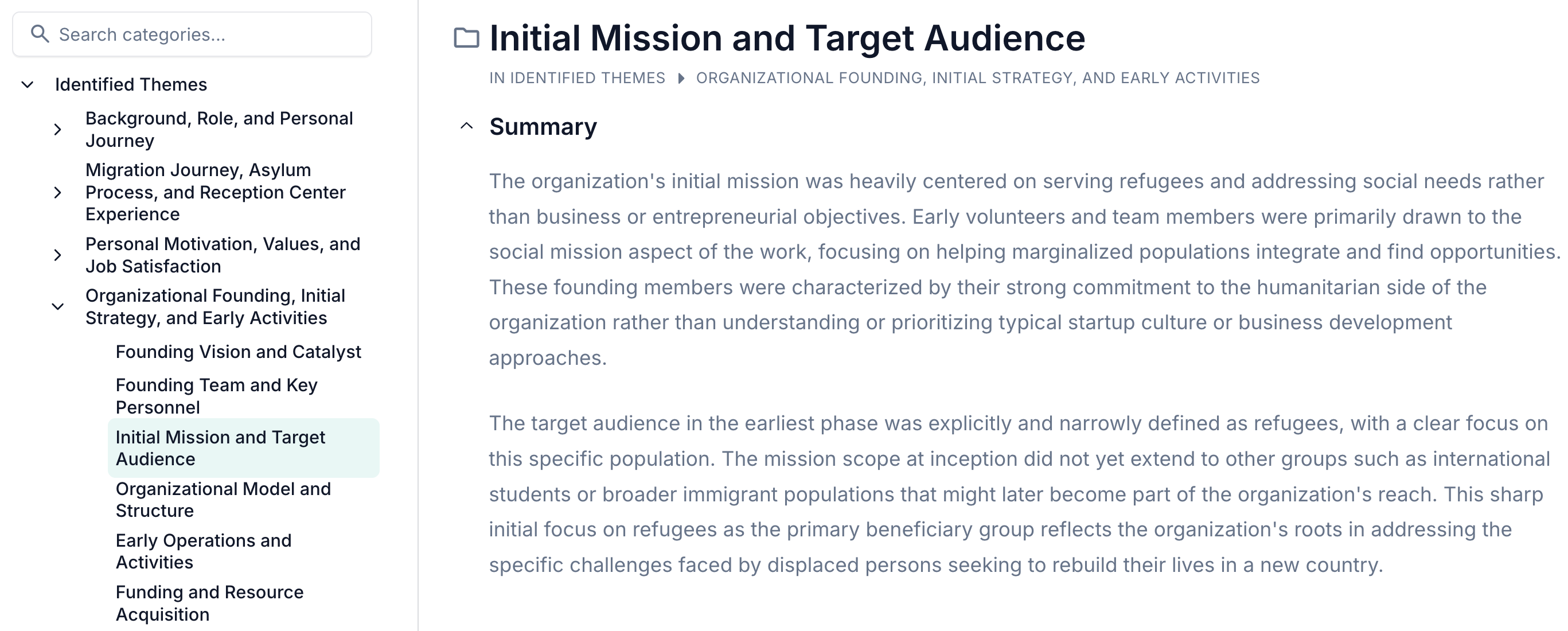

Browsing Skimle's output in more detail, we were struck by how close the AI-generated thematic analysis came to our own. In many places, it picked up the very same quotes we had used in our manuscript to illustrate key themes. The theme summaries (like the one above) provide an accurate but partial view of the data. For instance, while the summary in the figure correctly describes the views of early members, it hides the fact that the founders' initial intent was to serve a broader target audience.

Additional analyses support our grounded model

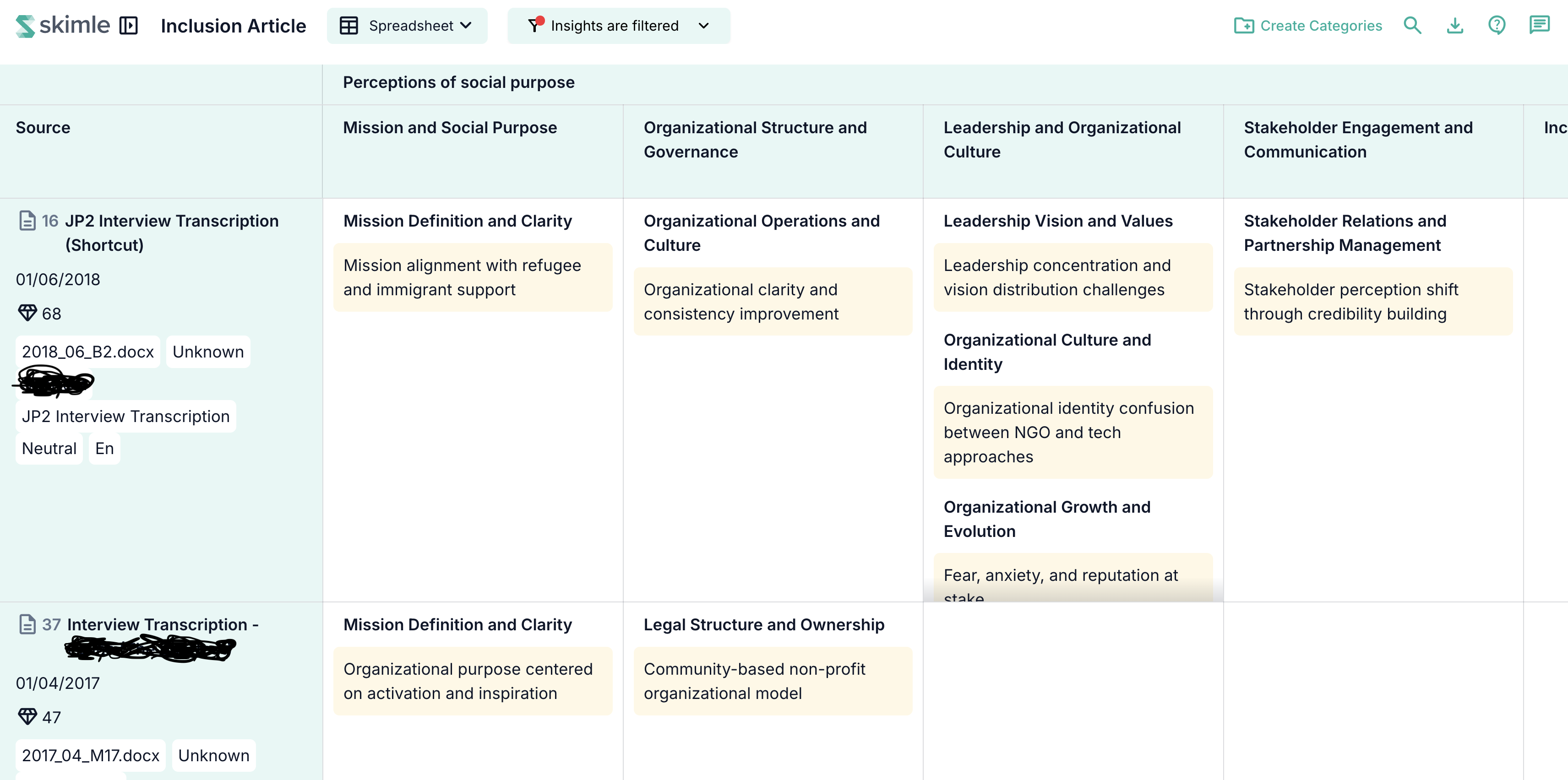

A thematic analysis of interviews can only go so far: it cannot capture broader dynamics in a case. In our case, this meant that the initial output did not surface our central theoretical claim that social purpose in nonprofits can be significantly shaped by the efforts of front-line employees and volunteers as they respond to beneficiaries' needs. To capture these dynamics, we created two new insight types, "Social impact and related practices" and "Perceptions of social purpose". In Skimle, an insight type works like a broad theme that guides the AI to identify relevant passages across the provided materials and then organize them into a hierarchical category structure. Below is a screenshot of the category structure Skimle generated for "perceptions of social purpose" from the spreadsheet view.

Chatting with our data

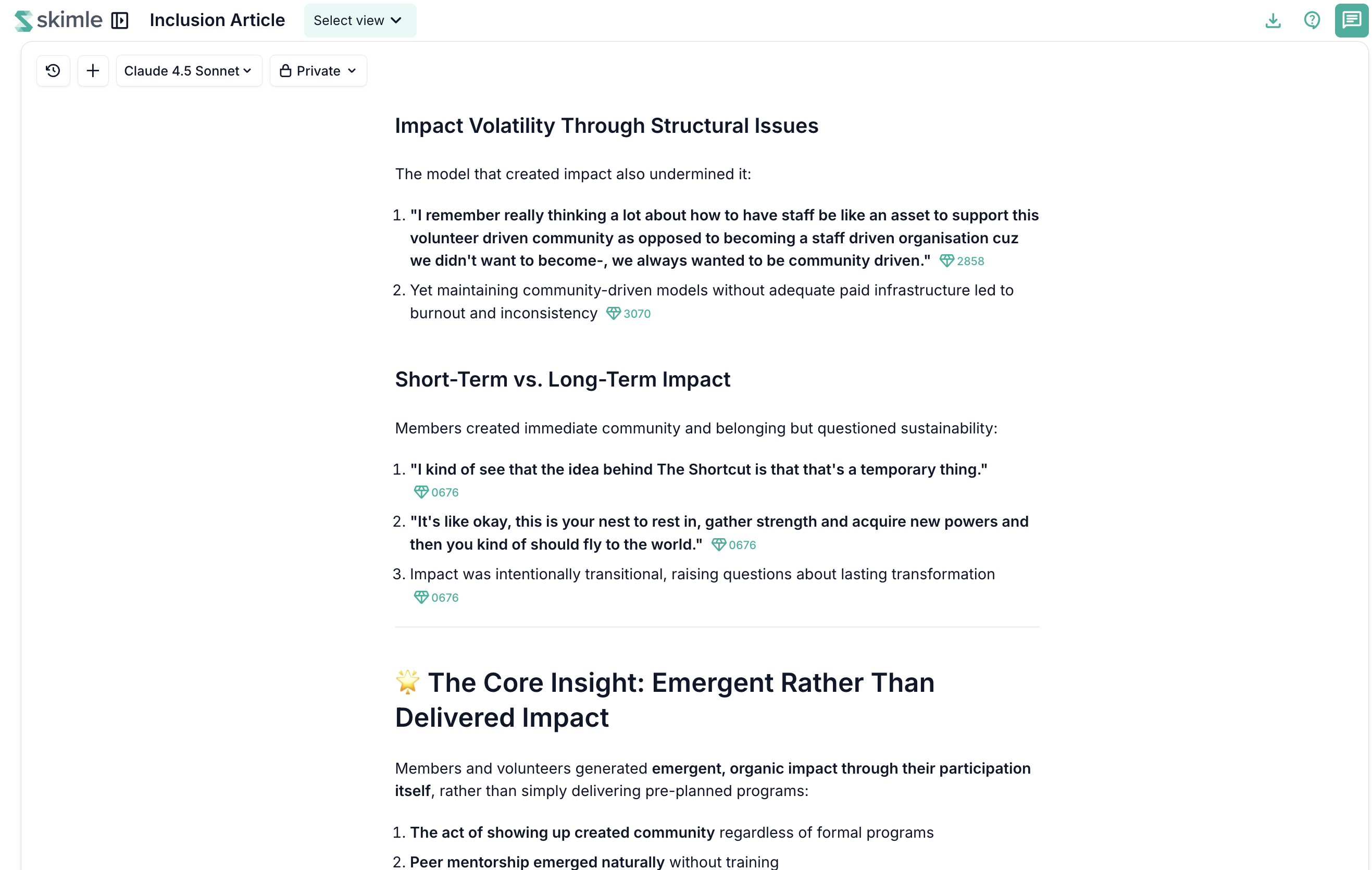

Like nearly all AI products these days, Skimle also has a chat interface. While chat is not really suited for systematic data analysis, it is an intuitive and quick way to check whether the empirical data supports emerging intuitions and research ideas.

We used it to probe a core finding of our study: the role that front-line employees and volunteers play in redefining the organization's purpose. As with any chat-based query, the wording matters: different formulations retrieve different slices of the same corpus. After a few rounds of refinement to make the question more precise, we landed on: "What type of impact did members and volunteers develop?" This prompt consistently pulled together evidence that spoke directly to our argument.

(The actual response was multiple pages long.)

An unexpected problem: flattening our longitudinal story

Overall, the data categories generated by Skimle were relevant, but they shared a common limitation: dynamic changes over time got flattened. The categories contained correct elements, but they also bundled together views that were separated by years, sometimes directly contradicting each other. At worst, category summaries appeared incoherent, combining nearly opposite views from 2017 and 2021 without attending to the very different stages of the nascent organization. A related but less prominent issue was that quotes from different roles were mixed into a single category, juxtaposing the divergent views of front-line volunteers and founders. By aggregating quotes on "one topic", the AI model creates a smooth narrative at the cost of the very distinctions at the center of our analysis.

This points to a clear development priority for Skimle in Spring 2026: the ability to filter and organize analyses based on metadata, such as the type of informant (in our case: employees, volunteers, founders, board members, and other stakeholders) and the date of the interview. In the near future, we should be able to leverage Skimle to surface distinctive patterns over time and across informants both within a single category and across categories. By showing how different themes shift over time and differ across roles, AI can immediately surface interesting patterns across interviews that often take time for a human researcher to notice.
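To make the idea concrete, here is a minimal sketch of what metadata-based grouping could look like. It is not Skimle's implementation or API: it assumes, hypothetically, that each coded insight carries the interview year and the informant's role, and it simply splits each category into (period, role) cells instead of pooling all quotes into one bucket. The `Insight` type, the `group_by_metadata` function, and the two-year period length are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Insight:
    """One coded passage. All fields are hypothetical, for illustration only."""
    category: str  # e.g. a theme or insight-type category
    year: int      # year the interview was conducted
    role: str      # informant type, e.g. "founder" or "volunteer"
    quote: str


def group_by_metadata(insights, period_length=2):
    """Split each category into (category, period, role) cells.

    Years are binned into periods of `period_length` years, counted from the
    earliest interview year, so that views separated by years and views from
    different roles end up in separate cells rather than one pooled summary.
    """
    if not insights:
        return {}
    start = min(ins.year for ins in insights)
    cells = defaultdict(list)
    for ins in insights:
        offset = (ins.year - start) // period_length * period_length
        period_start = start + offset
        period = f"{period_start}-{period_start + period_length - 1}"
        cells[(ins.category, period, ins.role)].append(ins.quote)
    return dict(cells)


if __name__ == "__main__":
    # Placeholder records, not data from the study.
    sample = [
        Insight("Target audience", 2017, "founder", "placeholder quote A"),
        Insight("Target audience", 2017, "volunteer", "placeholder quote B"),
        Insight("Target audience", 2021, "volunteer", "placeholder quote C"),
    ]
    for key, quotes in sorted(group_by_metadata(sample).items()):
        print(key, quotes)
```

With cells like these, a summary could be generated per period and role and then compared side by side, which is where shifts over time and differences between founders and volunteers would become visible.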

Concluding thoughts: What if...?

In hindsight, Skimle picked up most of the themes we ultimately developed, and then some. It also highlighted several aspects we had gradually set aside during the long writing process. One example is the organization's widespread use of unpaid interns prior to the Covid-19 pandemic. While we noticed and discussed it, the issue faded from view toward the end of the research project. Had we had Skimle, we might well have included it in our final version.

Intuitively, it feels as though Skimle would have significantly sped up our research process and helped the author team get on the same page more quickly about what is in the interviews and the evidence behind each theme. Instead of focusing on organizational identity, we might have recognized earlier that purpose (and its close cousin, mission) was a central thread running through the data. In fact, the content Skimle produced for the "Perceptions of social purpose" insight type was strikingly similar to the manual coding we spent days producing ourselves.

Hindsight is always hindsight, of course. While AI would definitely have saved a lot of time, whether it would have led to a better outcome depends on how we would have used it. The risk is clear: if we had relied heavily on the initial AI analysis, we might have downplayed the important longitudinal story in our data. At the same time, because we conducted the interviews ourselves and witnessed the changes in the organization's purpose, in both rhetoric and practice, over the years, this seems unlikely.

More broadly, the limitations we encountered were instructive. AI can dramatically speed up retrieving and organizing data, but it augments rather than automates the researcher's core work: deciding what matters in the case, making sense of tensions, and making the theoretical leap that turns material into an argument. As a companion rather than a substitute, Skimle felt genuinely valuable: it helped us map the corpus quickly, resurface sidelined threads, and test emerging interpretations against the data. As Skimle adds more features, the biggest gains will come from combining AI's scale with human judgment. AI is great at surfacing quotes and producing alternative interpretations. Only a human can tell which ones are interesting and combine those into a compelling theoretical explanation.

About the Authors

Farah Kodeih is Professor of Strategy and Social Sustainability at IÉSEG School of Management (Paris). Her research explores how organizations and individuals respond to institutional instability and change—focusing on repression, exclusion, democratic backsliding, and forced displacement.

Henri Schildt is Professor of Strategy with a joint appointment at the Aalto School of Business and the School of Science. His research interests span artificial intelligence, strategic change, and social sustainability. Henri is also co-founder and CEO of Skimle.com.